How Data Centers Became the Hidden Backbone of Our Modern World

History's insights for navigating AI's next bottleneck

The 19th century was transformed by railroads. The 20th century, by electricity. The last four decades have been shaped by data centers. Now AI is pushing our infrastructure to its limits, disrupting labor and power markets, shifting geopolitics, all while putting new sci-fi capabilities in everyone’s pockets.

Today, millions of GPUs need power to keep up with insatiable demand. The scale is unprecedented, but the shape of this buildout is familiar: networks that cluster in unlikely places, expensive hardware that seeks full utilization, and a collision with our power system. The question isn’t if the buildout will continue, but how we use this opportunity to usher in a new step change in our infrastructure.

We traced history to see what we can learn from the past and where the next unlock may be hiding. What follows are three stories from Data Centers: The Hidden Backbone of Our Modern World.

Why is the global epicenter of data centers in rural Virginia?

Hubs and fiber.

In the early 1990s, a group of network providers in the Virginia area got together over a beer and decided to connect their networks. They selected the corner of a parking garage to stack servers and swap traffic. This exchange point, known as MAE-East, soon became the most important site on the commercial internet. If you sent an email from London to Paris, it likely crossed the Atlantic through that D.C.-area garage. The same pattern repeated in downtown LA when One Wilshire, a bland office building from the mid-1960s, became the internet's West Coast hub and the most valuable commercial real estate in LA, selling for a record $437.5 million in 2013.

As demand for dense connectivity outgrew a single garage or office building, the industry coalesced around data center regions. None is more famous than the "data center alley" that grew out of farmland in Ashburn, Virginia. AOL located its core connectivity there in the early 1990s, MAE-East was relocated, and Loudoun County created an economic flywheel by making data centers as easy to build as a normal office park. Today, data-center-zoned land in Loudoun County trades at an astonishing $6M per acre.

Every hub needs its spokes. For data centers, it's all about fiber: hair-thin strands of glass that carry every text, image, and video around the world. During the dot-com bubble of the '90s, we laid way too much fiber. By 2000, there was enough fiber to wrap around the Earth thousands of times, yet less than 10% of it was lit up. The rest sat dark, waiting to be connected.

That overbuild became a subsidy for Web 2.0, smartphones, and the hyperscale cloud. Today, nearly 600 active submarine cables carry 99% of international internet traffic.

How did we manage scale in the past?

We stopped wasting capacity.

In the 1960s and '70s, computers lived in a "glass house": expensive mainframes run by trained operators who fed them punch cards. IBM was king, the NVIDIA of the 1970s. The invention of time-sharing was the first unlock: many users shared one machine, each getting slices of CPU time in turn. For the first time, a student at a teletype had direct access to a computer.

The rise of the PC led to “client-server” architectures where companies had server rooms built on commodity hardware. Servers sat idle most of the time. Along came virtualization: suddenly, dozens of workloads could run on the same hardware, each believing it had its own machine.

Amazon took it further. In 2006, EC2 let developers rent a virtual server by the hour. You could launch an internet startup with a credit card, paying only for what you used. Containers and serverless designs have pushed the abstraction even further from the physical machines underneath.

Each step pushed utilization up. The higher the utilization, the lower the per-unit cost, and the more economies of scale rewarded centralization.

Today's AI chips turn the knob again. A single H100-class GPU now costs about as much as a small car. GPUs are bought in 2- or 8-GPU nodes wired together with ultra-fast interconnects, and a single 8-GPU training box can be a half-million-dollar asset. At that price point, utilization is existential.
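To make the utilization point concrete, here is a minimal Python sketch of amortized hardware cost per useful GPU-hour. It assumes straight-line amortization over a hypothetical four-year life and ignores power, cooling, networking, staffing, and financing. The roughly $500,000 price for an 8-GPU box is the figure from the paragraph above; the function name is illustrative, not from any library.

```python
# Hypothetical model: the ~$500,000 price for an 8-GPU box comes from the text;
# the 4-year amortization period is an assumption for illustration only.
HOURS_PER_YEAR = 24 * 365


def cost_per_useful_gpu_hour(
    capex_usd: float, gpus: int, years: float, utilization: float
) -> float:
    """Hardware cost per GPU-hour of useful work under straight-line amortization.

    Ignores power, cooling, networking, staffing, and financing costs.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Every GPU-hour the box exists for, busy or idle, is paid for up front.
    available_gpu_hours = gpus * years * HOURS_PER_YEAR
    # Only the utilized fraction of those hours produces useful work.
    return capex_usd / (available_gpu_hours * utilization)


if __name__ == "__main__":
    for u in (0.3, 0.6, 0.9):
        cost = cost_per_useful_gpu_hour(500_000, gpus=8, years=4, utilization=u)
        print(f"{u:.0%} utilization: ${cost:.2f} per useful GPU-hour")
```

Because the cost is divided by utilization, a box that is busy 30% of the time costs three times as much per useful GPU-hour as one kept 90% busy, whatever amortization period you assume.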

How did power become the bottleneck?

While the current scale of the power problem is unprecedented, the tension between power and data centers is not new.

In the early 2000s, Christian Belady visited NTT Docomo, the Japanese cellular operator, with "10 best practices" for their data center efficiency: separate hot and cold aisles, seal the gaps, tighten airflow. When he returned to Tokyo three months later, their verdict was: "We implemented everything. It didn't work. We are going back to the way it was."

They complained that the hot aisle was even hotter! But from a physics perspective, that's ideal: the hotter the hot aisle, the more effectively heat is being removed from the data center. The problem was goal-setting; no one was looking at a common metric.

On the 12-hour flight home, Belady invented PUE (Power Usage Effectiveness): total facility power divided by the power reaching IT equipment. In the mid-2000s, that number was typically 2.5 or higher, meaning that for every watt powering servers, another 1.5 watts or more vanished into overhead.

Over the next decade, hyperscalers attacked that ratio by shifting to more efficient cooling architectures, rethinking power distribution inside the building, and building in more favorable climates. The result? PUE fell toward 1.1, meaning almost all of the electricity goes to chips, not chillers.
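A minimal Python sketch of the PUE arithmetic, using the 2.5 and 1.1 values above. The function names and the 100 MW grid connection are hypothetical, chosen only to show how a lower PUE lets the same grid connection feed more IT load. Real PUE reporting (what counts as facility versus IT power, over what measurement interval) is more involved than a single ratio.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_kw <= 0 or total_facility_kw < it_kw:
        raise ValueError("need 0 < it_kw <= total_facility_kw")
    return total_facility_kw / it_kw


def overhead_watts_per_it_watt(pue_value: float) -> float:
    """Non-IT overhead (cooling, power conversion, lighting, etc.) per watt of IT load."""
    return pue_value - 1.0


def it_power_available_mw(grid_connection_mw: float, pue_value: float) -> float:
    """IT load that a fixed grid connection can support at a given PUE."""
    return grid_connection_mw / pue_value


if __name__ == "__main__":
    # Hypothetical 100 MW grid connection; PUE values taken from the text.
    for p in (2.5, 1.1):
        print(
            f"PUE {p}: {overhead_watts_per_it_watt(p):.1f} W overhead per IT watt, "
            f"{it_power_available_mw(100, p):.1f} MW of IT load from 100 MW"
        )
```

In this toy example, dropping PUE from 2.5 to 1.1 raises the IT load a fixed 100 MW connection can support from 40 MW to roughly 91 MW.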

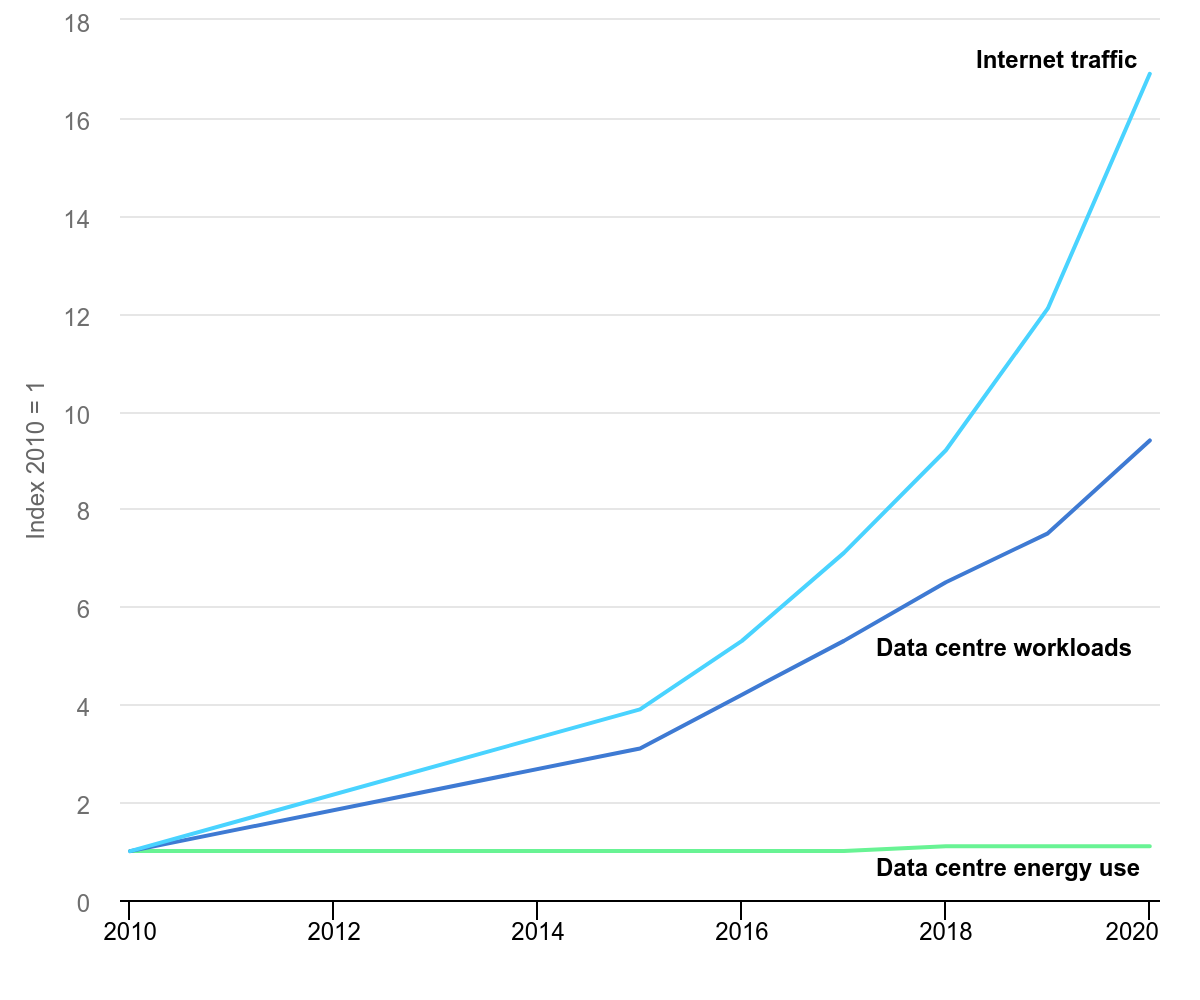

From 2010 to 2020, cloud, streaming, and mobile drove a 17x surge in internet traffic, yet data center energy use barely moved. Inefficient, underutilized server closets were retired as their workloads migrated to efficient hyperscale services.

But today's AI data centers are 10-100x more power-hungry than those of the past: 50-100 MW data centers are rounding errors next to 5 GW builds. Yesterday's solutions will not solve today's problems. And yet, it is wise to look for rhymes: Are today's chips fully utilized? Are we using the full power capacity of our data centers? Can we get more power out of our existing grid?

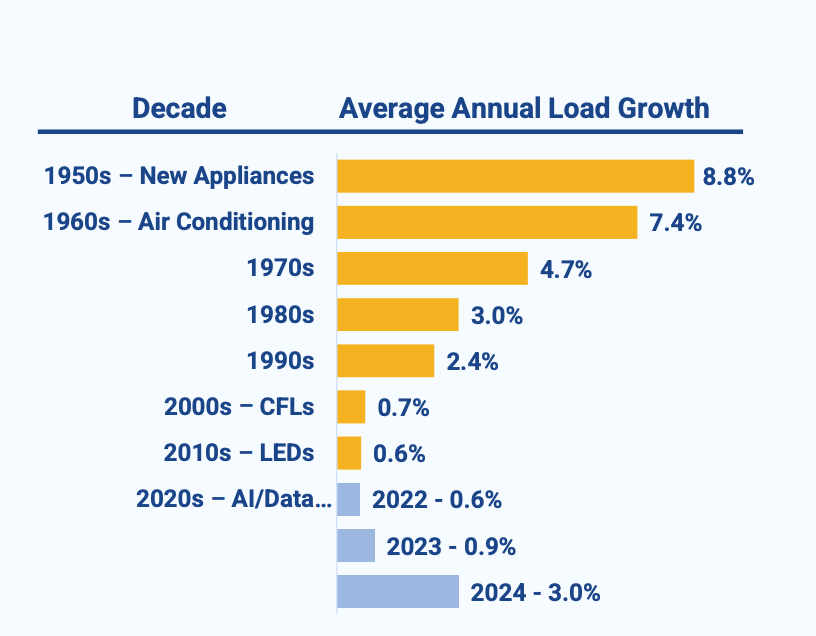

False panic about the energy needs of IT equipment is as old as the internet. What is new this time is the speed and scale. After 15 years of flat national load growth, a confluence of factors (largely industrial growth, transportation electrification, and AI data centers) is kicking off a new wave of electricity demand.

A step-change moment

It’s easy to read history and marvel at the leap between the world before and after the car or the light bulb. But we’re living through a shift like that right now. When we were kids, we could barely load a text page over dial-up. Now our kids stream HD video mid-flight or chat with the latest reasoning model.

Living through this moment means holding two truths in tension. We enjoy a world where compute keeps getting cheaper—unlocking products and discoveries that would have sounded like science fiction a decade ago. And we inherit the hard problems this technology creates: strained grids, environmental degradation, and real impacts on communities where these buildings land.

Every era’s constraint became the next era’s unlock. The next decade is an invitation to reengineer everything from chips to the power grid so that the era ahead is fundamentally more abundant and sustainable.

If you want the full dive into how we got here and what comes next, join over 100K others and listen to The Stepchange Show in any podcast player.

Authored by Anay Shah & Ben Shwab Eidelson, co-founders and managing partners of Stepchange Ventures. Stepchange invests in ambitious companies building software to accelerate energy abundance and upgrade critical infrastructure. They’ve partnered with 20 companies to date, including multiple co-investments with MCJ.

🎙️ Inevitable Podcast

🏡 Aleks Gampel, COO and Co-founder of Cuby, explains how his company’s mobile microfactories can address the housing crisis by cutting costs, waste, and carbon while using mostly unskilled labor to build climate-resilient homes. Listen to the episode here.

🎥 The Lean Back

👩‍💻 Climate Jobs

Check out the Job Openings space in the MCJ Collective Member Hub or the MCJ Job Board for more.

I&C Technician at Aalo (Idaho Falls, ID)

Cloud Security Engineer at Base Power (Austin, TX)

Principal Site Reliability Engineer at Crusoe (San Francisco, CA)

Cloud Infrastructure Engineer at Crusoe (San Francisco, CA)

Chief of Staff at Jaro Fleet (Oakland, CA)

Procurement Specialist at Lightship (Broomfield, CO)

Supply and Demand Planner at Mill (San Bruno, CA)

Accounts Payable Clerk at Moment Energy (Coquitlam, BC)

Director of Remote Sensing at Overstory (Remote)

📹 MCJ is looking for a Content Specialist. If you (or somebody you know) love podcasts, climate + energy tech, fast-moving storytelling, and turning raw ideas into engaging multimedia, this is the gig for you. Apply here.

🎉 We’ve facilitated 100 successful hires across the MCJ portfolio. If you’re exploring roles in climate and energy startups, don’t overlook VC firms—we’re close to founders, see hiring needs early, and help match talent to roles. Interested? Fill out our talent form here.

MCJ Newsletter is a FREE email curating news, jobs, Inevitable podcast episodes, and other noteworthy happenings in the MCJ Collective member community.

💭 If you have feedback or items you’d like to include, feel free to reach out.

🤝 If you’d like to join the MCJ Collective, apply today.

💡 Have a climate-related event or content topic that you’d like to see in the MCJ newsletter? Email us at content@mcj.vc
